Personal Server

In 2017, while deploying a physical server in Vancouver, I began exploring alternatives to the prevailing model of centralized cloud hosting for the content we create and own.

Technical Background

My understanding of communications infrastructure began in the 1990s, when I helped build and maintain internal email, calendar, and web servers over 128K ISDN lines. Later, my work shifted to designing computer networks and telephone systems. That work, together with my conversations with telecom engineers, deepened my appreciation for how our infrastructure was designed to operate.

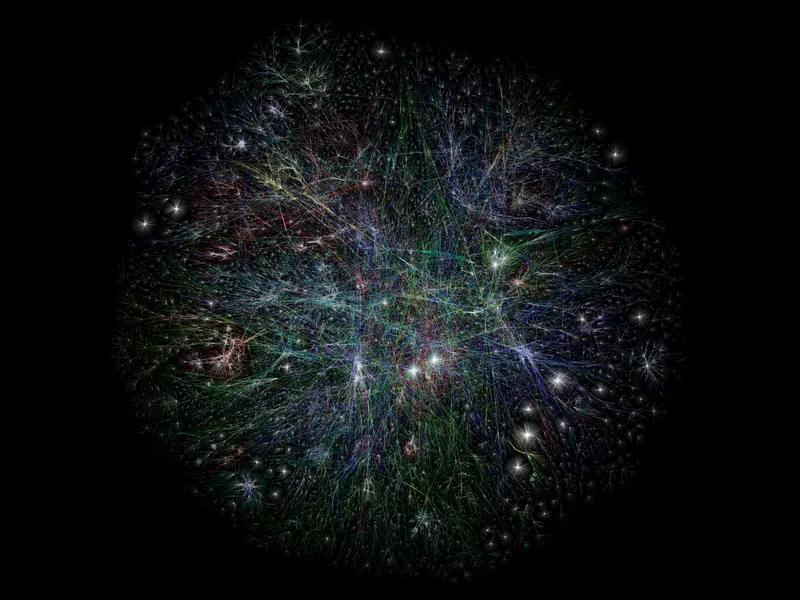

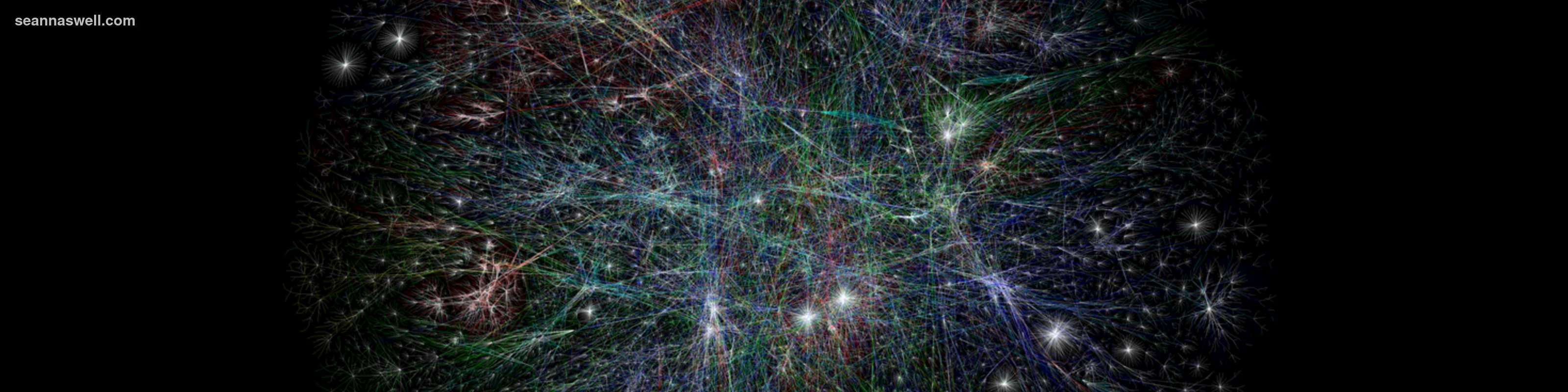

AT&T's Long Lines system was built to route around nuclear blasts and other points of failure. The fiber-optic network that replaced it kept the same principle: no single point of failure. A regional outage should not disable national communications. Yet the current trend of outsourcing critical services to a handful of distant "cloud" providers creates fragility: communities that cannot operate autonomously, and local services that depend on infrastructure thousands of miles away.

The Design

I designed a decentralized hosting architecture that returns to the internet's original design philosophy while addressing modern usability needs:

- Local hardware device at home/office storing all user data (photos, articles, websites)

- Synchronized public-facing servers operated by regional hosting providers

- Intuitive GUI enabling non-technical users to manage their infrastructure

- Provider portability allowing seamless migration without data lock-in

- Regional compliance handled at the provider level, not centrally by a few mega-corporations
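To make the synchronization and portability points more concrete, here is a minimal sketch of one way they could work. It assumes (this is not part of the original design) that the home device is the single source of truth and that a provider's public-facing server holds only a mirrored copy, kept current by comparing content-hash manifests. The names `build_manifest`, `plan_sync`, `sync`, and `ProviderMirror` are hypothetical, and the mirror lives in memory purely for illustration; a real deployment would more likely reuse existing tools (for example rsync over SSH) plus authentication.

```python
import hashlib
from dataclasses import dataclass, field


def content_hash(data: bytes) -> str:
    """Return a SHA-256 hex digest that identifies a piece of content."""
    return hashlib.sha256(data).hexdigest()


def build_manifest(files: dict[str, bytes]) -> dict[str, str]:
    """Map each stored path to the hash of its content."""
    return {path: content_hash(data) for path, data in files.items()}


@dataclass
class SyncPlan:
    upload: list[str] = field(default_factory=list)  # new or changed paths
    delete: list[str] = field(default_factory=list)  # paths removed locally


def plan_sync(local: dict[str, str], remote: dict[str, str]) -> SyncPlan:
    """Diff two manifests, treating the local (home) manifest as authoritative."""
    plan = SyncPlan()
    for path, digest in sorted(local.items()):
        if remote.get(path) != digest:
            plan.upload.append(path)
    for path in sorted(remote):
        if path not in local:
            plan.delete.append(path)
    return plan


class ProviderMirror:
    """Hypothetical in-memory stand-in for a regional provider's public copy."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.files: dict[str, bytes] = {}

    def manifest(self) -> dict[str, str]:
        return build_manifest(self.files)


def sync(local_files: dict[str, bytes], mirror: ProviderMirror) -> SyncPlan:
    """Push local changes to the mirror and return the plan that was applied."""
    plan = plan_sync(build_manifest(local_files), mirror.manifest())
    for path in plan.upload:
        mirror.files[path] = local_files[path]
    for path in plan.delete:
        del mirror.files[path]
    return plan


if __name__ == "__main__":
    home = {"photos/a.jpg": b"...", "articles/post.html": b"<p>Hello</p>"}
    provider_a = ProviderMirror("provider-a")
    sync(home, provider_a)  # first sync copies everything

    home["articles/post.html"] = b"<p>Edited</p>"
    print(sync(home, provider_a).upload)  # ['articles/post.html']

    # Migrating providers: sync the same home data to an empty mirror.
    provider_b = ProviderMirror("provider-b")
    print(sync(home, provider_b).upload)  # ['articles/post.html', 'photos/a.jpg']
```

Because the provider holds only a copy in this sketch, switching providers needs nothing from the old one: the home device simply syncs its full manifest to a new, empty mirror, which is one way the "no data lock-in" goal could be met.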

The business model generates revenue for three groups: hosting partners, who manage the public infrastructure; users, who can optionally monetize their content; and developers, who build plugins that extend core functionality. Existing ISPs and hosting providers could license the server-side software as an additional service offering.

Technical Foundation

The architecture, inspired by Ted Nelson's Project Xanadu, builds on decades of open-source Unix development, proven protocols, and existing tools. All the required technology already exists; it simply needs proper integration and a user-friendly implementation.

Status

The project remains viable and can move into development when timing and partnerships align. The concept addresses real infrastructure fragility while returning ownership and control to content creators.